How Do We Test Lighthouse?

Exploring the tests that keep Lighthouse stable

When I started Lighthouse, it was a personal project. Even so, one of the first things I did was set up a CI pipeline and write tests. Most of the backend was built using TDD, so it’s safe to say that tests were on our minds from the get-go.

In this post, I’d like to explore how Lighthouse is tested and try to explain what purpose the different tests serve.

Lighthouse is the flagship product of Let People Work. It allows you to forecast completion dates with confidence and supports a wide range of scenarios, including projects with multiple teams and features that haven’t been broken down yet.

It’s free to use, open-source, and doesn’t need a cloud connection.

Join our Slack Community to learn from other users and become part of the development.

Why Bother With Tests?

You may wonder: if this used to be a personal project, why was I writing tests from the very beginning? The reason is simple: I enjoy writing tests. I don’t see them as a burden. It’s a great feeling when you’ve written a test for a new feature, implemented the functionality, and now that test and all the others are green.

Or when you’ve just reproduced a bug with an automated test. Yes, just reproducing the bug in a test already feels great, because it gives us a clear indication of when it’s fixed (the test passes) and that this specific problem won’t happen again. Ever.
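To make this concrete, here is a minimal TypeScript sketch of a regression test. The helper `averageThroughput` and the bug it once had are hypothetical and not taken from the Lighthouse code base; what matters is the order of work: first write a test that fails because it reproduces the bug, then fix the code until the test passes, and keep the test in the suite as a guard.

```typescript
// Hypothetical helper (not from the Lighthouse code base): average number of
// items closed per day over a throughput history.
function averageThroughput(closedPerDay: number[]): number {
  // The fix: an empty history used to yield NaN (0 / 0).
  if (closedPerDay.length === 0) {
    return 0;
  }
  const total = closedPerDay.reduce((sum, closed) => sum + closed, 0);
  return total / closedPerDay.length;
}

// Regression test: written first, it failed by reproducing the NaN bug.
// Now that it passes, it keeps this specific problem from coming back.
function testEmptyHistoryYieldsZero(): void {
  const result = averageThroughput([]);
  if (result !== 0) {
    throw new Error(`expected 0 for an empty history, got ${result}`);
  }
}

testEmptyHistoryYieldsZero();
```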

In my experience, many developers dislike tests. At best, they accept that they have to write them. Instead of embracing what great tests allow you to do (change your code with confidence!), they bolt them on afterwards, because someone expects the code coverage to be at least <add random number here>. I’m aware that this is far from true for many developers and companies, but it’s something I have experienced more often than developers being excited about tests…

Can I Trust This Change?

Building up a decent test suite that covers various aspects of Lighthouse’s functionality has been instrumental in evolving the application over time. There have been several bigger refactorings, as well as the occasional bug that slipped through and was discovered later.

Knowing that a set of tests will catch it if you break something that used to work is very calming. It allows me to make aggressive changes, refactor as I see fit, and occasionally try something out, with very limited risk.

Interestingly enough, it’s often not refactoring that makes tests fail. Instead, it has happened several times that I changed something and a seemingly unrelated test suddenly failed. I would be lying if I said my first thought wasn’t often: “Oh, I probably need to adjust the test so it passes again,” followed by contemplating how to make the test more reliable. Only to then find out that I had just unintentionally broken something.

Can We Trust This Release?

As a user of Lighthouse (if you aren’t one yet, head over to https://letpeople.work/lighthouse to learn more!), you get something out of this too. You probably don’t want to deal with amateur software that keeps breaking: something works in one release and is suddenly broken in the next. Once an application gets a reputation for being unreliable, it’s hard to get rid of it.

What’s more, because Lighthouse is free and open-source, many people associate it with being “unprofessional”. While many open-source projects are indeed hobby projects, a lot of open-source software is probably built more professionally than many paid applications and services. As Lighthouse also targets corporations (or people within corporations who want to change how they forecast), it’s an advantage that we can show that things are properly tested. On many levels. Continuously.

So let’s dive deeper into this.

What Do We Test?

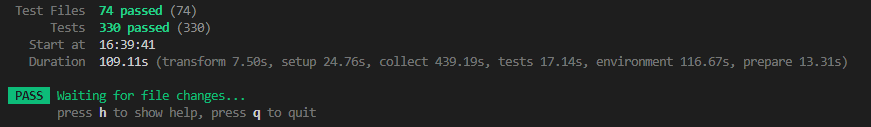

Lighthouse has unit, integration, and end-to-end tests. All of them run continuously: every time a change is pushed, the tests are run. If a single test fails anywhere along the pipeline, we know immediately that something broke.

Backend

The Lighthouse backend is built with ASP.NET Core in C#. Most classes were written using TDD and are therefore unit-tested, which leads to high code coverage (not that coverage alone tells us that much). You can see this for yourself on SonarQube Cloud.

In addition to the unit tests, we also run integration tests. As Lighthouse works with data coming from both Jira and Azure DevOps, it has a hard dependency on those services. While they can be mocked away to test most things, we also verify that the real services return the expected data: we have set up demo projects in each and make sure we get what we expect.
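One way to combine fast mocked tests with checks against the real services is a shared contract check: the expectations about a demo project are written once and run against any implementation. The TypeScript sketch below assumes a hypothetical `WorkItemService` abstraction; Lighthouse’s actual backend is C#, and the interface, the project key `"DEMO"`, and the expected counts are illustrative only.

```typescript
// Hypothetical, simplified view of a work item as a connector might return it.
interface WorkItem {
  key: string;
  state: "ToDo" | "Doing" | "Done";
}

// Hypothetical abstraction over Jira and Azure DevOps.
interface WorkItemService {
  getWorkItems(projectKey: string): Promise<WorkItem[]>;
}

// Fake used in unit tests: fast, deterministic, no network access.
class FakeWorkItemService implements WorkItemService {
  private readonly items: WorkItem[];

  constructor(items: WorkItem[]) {
    this.items = items;
  }

  async getWorkItems(_projectKey: string): Promise<WorkItem[]> {
    return this.items;
  }
}

// Expectations about the demo project, written once. The numbers are made up
// for this sketch; a real check would mirror what the demo project contains.
async function checkDemoProjectContract(service: WorkItemService): Promise<void> {
  const items = await service.getWorkItems("DEMO");
  if (items.length !== 3) {
    throw new Error(`expected 3 work items, got ${items.length}`);
  }
  const done = items.filter((item) => item.state === "Done").length;
  if (done !== 1) {
    throw new Error(`expected 1 done item, got ${done}`);
  }
}
```

In a unit test, the contract runs against `FakeWorkItemService`; an integration test would pass a real connector pointed at the demo project, so the fake and the real service are held to the same expectations.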

Frontend

The frontend is built in React, and we’re using Vitest for testing. In general, every component should be covered by tests. Compared to the backend, the coverage is not as good (see SonarQube Cloud). The main reason is that I had no prior experience with React, so a lot of the focus was simply on getting it to run, which sometimes produced suboptimal code that was harder to test. That said, as we keep adding features, fixing bugs, and refactoring along the way, coverage has increased over the last few months.
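A common way to make React code easier to test is to move logic out of components into plain functions that can be checked without rendering anything. This is not necessarily how the Lighthouse frontend is structured, and `progressPercent` is a hypothetical helper. In a Vitest file, each check would typically read `expect(progressPercent(1, 3)).toBe(33)` inside a `test(...)` block; here they are kept dependency-free.

```typescript
// Hypothetical helper extracted from a progress-bar component: the component
// only renders the number, while the edge cases live here.
function progressPercent(done: number, total: number): number {
  // Nothing to do yet: avoid dividing by zero.
  if (total <= 0) {
    return 0;
  }
  // Round to a whole percent and never show more than 100%.
  return Math.min(100, Math.round((done / total) * 100));
}

// Plain checks: no DOM or rendering needed.
const cases: Array<[done: number, total: number, expected: number]> = [
  [0, 0, 0],
  [1, 3, 33],
  [2, 3, 67],
  [5, 4, 100],
];
for (const [done, total, expected] of cases) {
  const actual = progressPercent(done, total);
  if (actual !== expected) {
    throw new Error(`progressPercent(${done}, ${total}) = ${actual}, expected ${expected}`);
  }
}
```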

End-To-End Tests

For quite a while, the unit and integration tests for the backend and frontend were all there was. However, at some point we noticed that things sometimes broke even though everything was green: the individual units worked, but put together, something was still off.

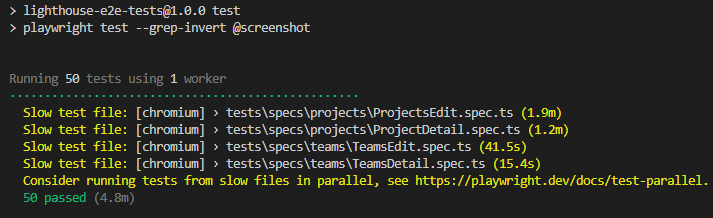

So we added end-to-end UI tests using Playwright. This allows us to spin up the whole application (once the unit and integration tests have passed) and test end-to-end scenarios. These tests interact with the frontend through the browser and work against the real backend, which in turn gets data from real Jira and Azure DevOps instances.

Using neat features like fixtures, we make sure each test runs in isolation (so it does not depend on other tests and can run in any order), while also putting Lighthouse into a known state (for example, by making sure certain teams or projects have been added).
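In Playwright, fixtures are declared with `test.extend`: each fixture function sets up state, hands a value to the test via `await use(value)`, and cleans up afterwards. The dependency-free sketch below imitates that lifecycle against a hypothetical in-memory backend so it runs without a browser; `FakeBackend`, `teamFixture`, and `runTest` are illustrative names, not Lighthouse or Playwright APIs.

```typescript
interface Team {
  id: number;
  name: string;
}

// Hypothetical in-memory stand-in for the Lighthouse backend.
class FakeBackend {
  private nextId = 1;
  readonly teams = new Map<number, Team>();

  addTeam(name: string): Team {
    const team: Team = { id: this.nextId++, name };
    this.teams.set(team.id, team);
    return team;
  }

  deleteTeam(id: number): void {
    this.teams.delete(id);
  }
}

// A fixture receives `use`, hands its value to the test through it,
// and cleans up once the test is done.
type Fixture<T> = (use: (value: T) => Promise<void>) => Promise<void>;

// Fixture that guarantees a known team exists for exactly one test.
function teamFixture(backend: FakeBackend, name: string): Fixture<Team> {
  return async (use) => {
    const team = backend.addTeam(name); // setup: known state
    try {
      await use(team); // run the test with the prepared team
    } finally {
      backend.deleteTeam(team.id); // teardown, even if the test failed
    }
  };
}

// Minimal runner: the test body is what the fixture passes its value to.
function runTest<T>(fixture: Fixture<T>, body: (value: T) => Promise<void>): Promise<void> {
  return fixture(body);
}
```

Because every test gets its own team and removes it afterwards, no test relies on state left behind by another, which is what allows the tests to run in any order.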

Note that we always prefer unit or integration tests. End-to-end tests are expensive because they are slow to run, so we use them to make sure the basic scenarios work rather than trying to cover everything.

Deploy The Demo App

If all other tests have passed, the CI pipeline then deploys the app to our demo environment. This is not an automated test, but it helps us check whether the app behaves as expected in the demo environment with the sample data.

Publish A Docker Image

In parallel with the deployment of the Demo App, Docker images with the tag dev-latest are built and pushed to the GitHub Container Registry.

After the image is pushed, the end-to-end tests are run against it. This makes sure there is no difference in behavior between the regular package and the one deployed via Docker.
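Running the same end-to-end suite against both the regular package and the Docker image mostly comes down to pointing Playwright at a different address. Here is a minimal `playwright.config.ts` sketch, assuming a hypothetical `LIGHTHOUSE_BASE_URL` environment variable, a made-up default port, and a hypothetical `./tests` folder; the actual pipeline may wire this up differently.

```typescript
import { defineConfig } from "@playwright/test";

export default defineConfig({
  testDir: "./tests", // hypothetical location of the end-to-end tests
  use: {
    // Hypothetical variable: set it to the URL of a container started from the
    // dev-latest image, or leave it unset to test a locally running package.
    baseURL: process.env.LIGHTHOUSE_BASE_URL ?? "http://localhost:5000",
  },
});
```

Tests then navigate with relative paths such as `await page.goto("/")`, which Playwright resolves against `baseURL`, so the test code itself doesn’t need to know which variant it is running against.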

The automatic deployment of the Demo App and the Docker image also simplifies manual verification. The latest version is just one click or one command away, so there’s really no excuse not to verify each individual change.

Conclusion

Only once all of the tests mentioned above have passed do we consider releasing a new package. The tests give us the confidence to release often, and history has shown that we can trust our test suite to tell us when we’ve just broken something.

Testing has been an integral part of Lighthouse since its first commits, and we’re happy we invested in it early on. Anything else would have been unprofessional.